EasyV2V: A High-quality Instruction-based Video Editing Framework

Dec 6, 2025


Jinjie Mai

Chaoyang Wang

Guocheng Gordon Qian

Willi Menapace

Sergey Tulyakov

Bernard Ghanem

Peter Wonka

Ashkan Mirzaei

Abstract

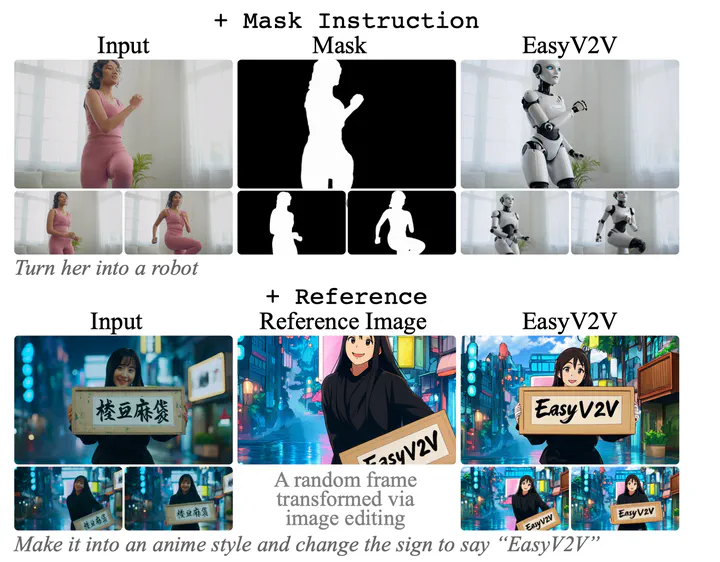

While image editing has advanced rapidly, video editing remains less explored and faces challenges in consistency, control, and generalization. We study the design space of data, architecture, and control, and introduce EasyV2V, a simple and effective framework for instruction-based video editing. On the data side, we compose existing experts with fast inverses to build diverse video pairs; lift image edit pairs into videos via single-frame supervision and via pseudo pairs with shared affine motion; mine dense-captioned clips for video pairs; and add transition supervision to teach how edits unfold. On the model side, we observe that pretrained text-to-video models already possess editing capability, which motivates a simplified design: simple sequence concatenation for conditioning, combined with light LoRA fine-tuning, suffices to train a strong model. For control, we unify spatiotemporal control through a single mask mechanism and support optional reference images. Overall, EasyV2V works with flexible inputs, e.g., video+text, video+mask+text, and video+mask+reference+text, and achieves state-of-the-art video editing results, surpassing concurrent and commercial systems. Code and data will be released upon approval.
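The abstract only names the "pseudo pairs with shared affine motion" strategy. Below is a minimal sketch of one plausible reading: a static (source, edited) image pair is animated with a single affine camera trajectory, and the *same* per-frame parameters are applied to both images. The result is a (source video, target video) pair whose motion is identical and whose only difference is the edit. Everything here is an assumption for illustration and not the authors' implementation. This includes the linear zoom/rotation/pan schedule, nearest-neighbour sampling with zero padding, the parameter defaults, and the function names `warp` and `make_pseudo_pair`.

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # grayscale image indexed as img[row][col]
Video = List[Image]        # list of frames


def warp(img: Image, scale: float, angle_deg: float,
         shift_x: float, shift_y: float) -> Image:
    """Apply one affine map: scale + rotation about the image centre, then a shift.

    Uses inverse mapping with nearest-neighbour sampling; output pixels that
    map outside the source image are filled with 0.0.
    """
    h, w = len(img), len(img[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    cos_t = math.cos(math.radians(angle_deg))
    sin_t = math.sin(math.radians(angle_deg))
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Undo the shift, then the rotation/scale (inverse of R(theta) is R(-theta)).
            dx, dy = x - cx - shift_x, y - cy - shift_y
            sx = (cos_t * dx + sin_t * dy) / scale + cx
            sy = (-sin_t * dx + cos_t * dy) / scale + cy
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out


def make_pseudo_pair(src: Image, edited: Image, num_frames: int = 8,
                     max_scale: float = 1.1, max_angle_deg: float = 10.0,
                     max_shift: float = 2.0) -> Tuple[Video, Video]:
    """Turn one (source, edited) image pair into a (source, target) video pair.

    Both videos receive the same per-frame affine parameters, so the motion is
    shared and only the edited content differs between them.
    """
    if len(src) != len(edited) or len(src[0]) != len(edited[0]):
        raise ValueError("source and edited images must have the same size")
    src_video: Video = []
    tgt_video: Video = []
    for t in range(num_frames):
        s = t / max(num_frames - 1, 1)  # progress along the trajectory, in [0, 1]
        # Hypothetical schedule: linearly ramp zoom, rotation and horizontal pan.
        params = (1.0 + (max_scale - 1.0) * s, max_angle_deg * s, max_shift * s, 0.0)
        src_video.append(warp(src, *params))
        tgt_video.append(warp(edited, *params))
    return src_video, tgt_video


# Usage: a 4x4 toy image with a hypothetical one-pixel "edit".
src = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
edited = [row[:] for row in src]
edited[1][1] = 99.0
src_vid, tgt_vid = make_pseudo_pair(src, edited, num_frames=4)
```

Sharing the warp parameters is the key design point of this sketch. Because the source and target clips move identically, a model trained on such pairs can only reduce its loss by learning the instructed change, not by learning to reproduce the motion.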

Type

Publication

arXiv preprint
